10 real-world applications of AI across industries

Artificial Intelligence is much more than a buzzword; it's a powerful force that's shaping the world of tomorrow. From manufacturing to healthcare, AI has rapidly become an integral part of our daily lives, reshaping how we interact, work, and live. With each passing day, AI algorithms continue to evolve and mature, unlocking new possibilities, streamlining processes, and enhancing our experiences in ways we never thought possible.

In this blog post, we'll show 10 specific examples of how AI is reshaping different industries. Each section in this post will cover a specific domain, highlighting both the challenges it faces and how AI is helping overcome these hurdles. Additionally, we will briefly describe some of the most popular algorithms in each domain, as well as how specific benchmarks are used to evaluate their performance.

Without further ado, here are our 10 picks for some of the most popular applications of artificial intelligence.

AI in manufacturing: Using segmentation models to streamline inspection

The field of engineering has always strived for automation, trying to streamline labor intensive processes to increase productivity and maximize profits. This relentless pursuit of efficiency has led many early adopters to try to incorporate machine learning algorithms into their processes.

High-tech manufacturing plants have always been among the leading adopters of automated processing technology. Traditionally, the manufacturing lines in these plants would incorporate rudimentary computer vision algorithms that performed simple measurements. Nowadays, with the popularization of deep neural networks, high-tech manufacturing plants have started incorporating deep learning solutions that further streamline their inspection processes.

A specific example of how manufacturing plants are using intelligent inspection algorithms is quality control. Quality control inspection algorithms are trained on thousands of images showing both good-quality and defective items and, using this information, they learn to identify the visual cues that distinguish a defective product from a good one. Once trained, these algorithms can be deployed to analyze new images with a high level of accuracy and speed. Additionally, these systems can operate around the clock with no breaks while maintaining the same level of accuracy.

Typically, automated quality control analysis is tackled using either classification or segmentation models. One of the most popular segmentation models of the last decade has been U-Net, an encoder-decoder convolutional network that first compresses spatial information into high-dimensional feature maps and then expands them back to the spatial domain to generate the segmentation map.

Additionally, researchers have turned to benchmarks like KolektorSDD to evaluate the performance of image segmentation models for quality inspection. Here, segmentation networks like U-Net have achieved human-like performance, scoring an average precision of over 96%. Average precision summarizes the precision-recall curve, so this result indicates that the model reliably separates defective from non-defective surfaces across decision thresholds.
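
To make the metric more concrete, here is a minimal sketch of how average precision can be computed from a ranked list of predictions. It assumes one defect score and one ground-truth label per sample (a sample could be an image or a pixel, depending on the evaluation protocol); the function name and inputs are illustrative and not taken from the benchmark's own tooling.

```python
def average_precision(scores, labels):
    """Average precision computed by ranking samples by their defect score.

    scores: predicted defect scores (higher means more likely defective)
    labels: ground-truth labels, 1 for defective and 0 for non-defective
    """
    # Sort samples from the most to the least suspicious.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    total_positives = sum(labels)
    if total_positives == 0:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            # Precision at the rank where this defective sample is retrieved.
            precision_sum += hits / rank
    return precision_sum / total_positives


example_ap = average_precision([0.9, 0.8, 0.7, 0.6, 0.2], [1, 0, 1, 0, 0])
```

A model that ranks every defective sample above every non-defective one scores 1.0, and each defective sample ranked too low pulls the value down.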

AI in healthcare: Efficient retinopathy screening using image processing

Healthcare is another field where innovation consistently thrives. Classical technological breakthroughs such as the Fourier transform paved the way to create complex 3D imaging systems such as MRI scans. Nowadays, the field of healthcare continues to embrace technological innovations and has started incorporating AI models to further revolutionize its services and improve the lives of patients.

Diagnostic tests typically generate complex data, often in the form of images that require expert interpretation. Specialists undergo years of dedicated training to decipher these images, but time constraints often limit their ability to delve into each case in detail. This is particularly evident in conditions like diabetic retinopathy.

Diabetic retinopathy, a specific type of retinopathy caused by damage to the blood vessels in the retina, is a common complication of diabetes and a leading cause of blindness among working adults. With the number of individuals diagnosed with diabetes projected to reach 600 million by 2040, a third of whom are expected to develop diabetic retinopathy, the need for efficient and accurate diagnosis becomes increasingly critical.

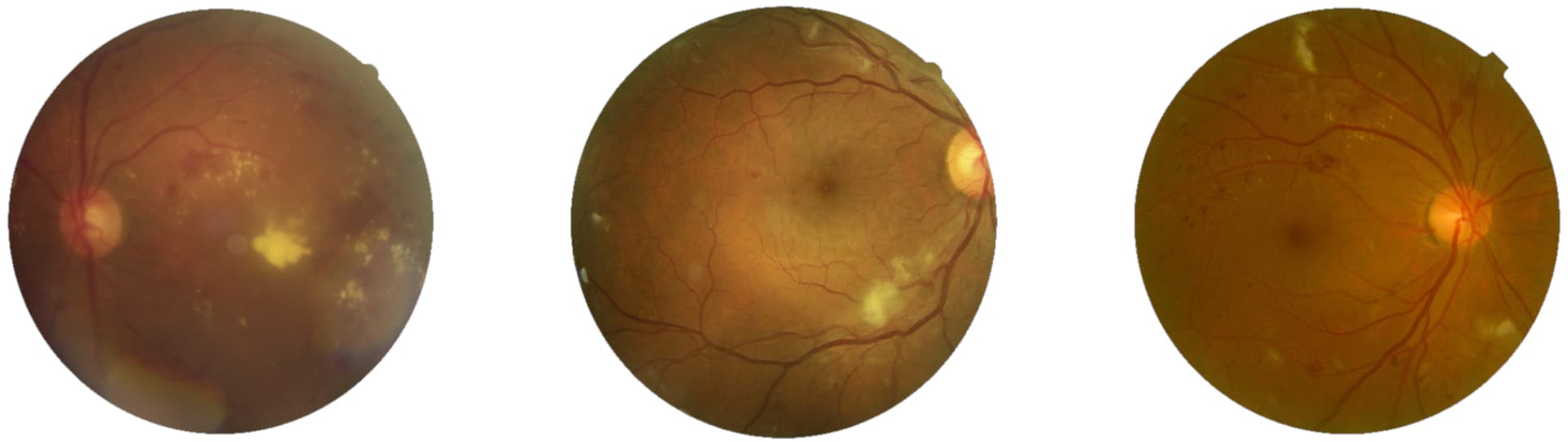

To prevent diabetic retinopathy, regular retinal screening is encouraged, and this is precisely where AI can help. Instead of relying on highly trained specialists, who could be overwhelmed by the workload, the fundus images acquired in the screening sessions can be analyzed using machine learning models. Some examples of these fundus images can be seen in Figure 1.

Machine learning models can help analyze fundus images in three main ways. First, there is automatic detection, where a binary machine learning classifier tries to identify the presence of any retinopathy lesion without specifically quantifying them. Second, machine learning models can also be used to categorize the different stages of the disease, grading the severity of retinopathy in accordance with the ICDR scale. Lastly, an even more elaborate way of using AI in retinopathy screening is to generate pixel-level maps that precisely mark typical retinopathy lesions such as microaneurysms, soft exudates, hard exudates, and hemorrhages.

When using binary machine learning classifiers to detect retinopathy, current state-of-the-art models achieve an accuracy of over 92% on large-scale datasets like DDR, which contains a total of 13671 fundus images.

AI in education: Crafting personalized learning paths to improve academic success

Although AI has brought considerable changes to many aspects of our society, its application in the field of education is still at an early stage. Historically, the education sector has lagged behind in terms of technological adoption when compared to fields like finance, healthcare, or engineering. These sectors have rapidly integrated cutting-edge technology into their core operations, leveraging AI for predictive analysis, personalized customer experiences, or improving health outcomes. Meanwhile, education, a sector just as vital, has been somewhat slower in its embrace of these advancements.

However, this is starting to change. The transformative power of AI can revolutionize the way learning is delivered and received. By harnessing the capabilities of AI, we can provide a learning experience that is deeply personalized to each individual student. It's not just about presenting information in a tailored format; it's also about making learning an active and practical process. This ensures that the knowledge gained is not just theoretical but can be applied in real-world scenarios.

A good example of how AI can be integrated into education is the Korbit learning platform. This innovative e-learning platform uses machine learning, natural language processing, and reinforcement learning algorithms to provide a personalized and engaging learning experience. When a student first engages with the platform, an initial assessment is conducted. Based on this evaluation, as well as the student's learning objectives and time availability, the platform devises a learning path tailored specifically for the student.

Within this learning path, students are given problem-solving exercises. They are presented with questions, and they can try to solve them, ask for help, or skip them. After each attempt at solving an exercise, a natural language processing algorithm checks the answer against the expected solution. If a student's answer is wrong, the AI system provides tailored educational aids, like hints and explanations, chosen by RL models based on the student's profile and attempts.

To assess the efficacy of their method, researchers at Korbit designed a head-to-head study where they measured the learning gains of students using either a conventional MOOC platform or Korbit's personalized learning path. After collecting data for a total of 199 students, their analysis revealed that those who were enrolled in the Korbit platform experienced learning gains 2 to 2.5 times greater than those on the MOOC platform.

AI in cybersecurity: Going beyond signatures and heuristics for malware detection

The race between cybercriminals and cybersecurity experts has been a constant tug of war for decades. As the techniques used by cybercriminals evolved, leading to more advanced malware that can cleverly change its appearance while keeping its harmful potential, cybersecurity experts had to adapt and find new ways to counter these threats.

Traditionally, malware detection was performed using “signatures”, special strings of code unique to each piece of malware. However, as malware evolved, it became more difficult to find signatures that reliably identified it. A first step to overcome this issue was to create heuristic engines that would look for specific behaviors indicating malicious intent. This was usually a rule-based approach where experts had to manually define and input rules based on their knowledge and experience. Each rule was a kind of “if-then” statement that flagged certain behaviors as potentially harmful. The main drawback of this approach is that its effectiveness largely depends on the quality and comprehensiveness of the rules defined by the experts.
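
The two traditional approaches can be illustrated with a toy sketch. Everything in it is hypothetical: the signature bytes, the malware name, the behavior fields, and the rule thresholds are made up for illustration and do not come from any real antivirus engine.

```python
# Hypothetical signature database: byte strings assumed unique to known malware.
KNOWN_SIGNATURES = {
    b"\xde\xad\xbe\xef\x13\x37": "ExampleTrojan.A",
}


def match_signature(file_bytes):
    """Return the name of the first known signature found in the file, or None."""
    for signature, name in KNOWN_SIGNATURES.items():
        if signature in file_bytes:
            return name
    return None


# Hypothetical expert-written rules: each one is an "if-then" check on behavior.
HEURISTIC_RULES = [
    ("modifies startup entries", lambda b: b.get("writes_to_startup", False)),
    ("encrypts many files", lambda b: b.get("files_encrypted", 0) > 100),
]


def triggered_rules(behavior):
    """Return the descriptions of every rule that the observed behavior triggers."""
    return [description for description, rule in HEURISTIC_RULES if rule(behavior)]
```

Changing a single byte of the malware is enough to defeat the signature check, while the heuristic check only catches behaviors that an expert thought to write a rule for.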

To overcome the limitations of both signature and heuristic engines, experts started exploring how machine learning could help identify malicious patterns by automatically learning from a database of known malware. Popular databases such as EMBER include useful information like the distribution of different values (Byte histogram) in a file, the information about text strings (String information) found in the executable, and the locations of important files needed to run the software (Data Directory).
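
As an illustration of the simplest of these features, the sketch below counts how often each byte value appears in a file. It is a simplified stand-in written for this post, not the feature extraction code that ships with EMBER, and the function names are our own.

```python
from collections import Counter


def byte_histogram(file_bytes):
    """Count how often each of the 256 possible byte values occurs."""
    counts = Counter(file_bytes)  # iterating over bytes yields integers 0-255
    return [counts.get(value, 0) for value in range(256)]


def normalized_byte_histogram(file_bytes):
    """Same histogram, scaled so that the entries sum to 1 for non-empty input."""
    histogram = byte_histogram(file_bytes)
    total = len(file_bytes)
    if total == 0:
        return [0.0] * 256
    return [count / total for count in histogram]
```

The result is a fixed-length vector of 256 numbers regardless of file size, which is what makes it convenient as input to a classifier.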

Using this information, different machine learning algorithms such as support vector machines, random forests, or deep neural networks have been used to perform automated malware identification, achieving a detection accuracy of up to 95% on the aforementioned dataset.

AI in agriculture: Automated pest monitoring using detection models

Agriculture is the foundation of our global food supply, yet it faces a significant adversary: insect pests. These pests are responsible for around 30% of the world’s agricultural production losses every year, so controlling them has become a crucial aspect of intensive agriculture. Over the years, the use of chemical pesticides has proven to be the most profitable solution for crop protection against these tiny invaders. However, their continued use has led to the poisoning of different organisms and other health concerns.

To avoid the excessive use of pesticides, professionals started monitoring the pest populations in their greenhouses and fields. This approach allows farmers to apply pest control measures only when necessary, thereby reducing the overall volume of pesticides used. The most basic approach for carrying out this monitoring is to place sticky traps in strategic locations within the fields and greenhouses. Once placed, an expert can check them every few days and manually identify the insects that have been trapped. Figure 2 shows different captured insects.

Sticky traps have proven to be an efficient method for monitoring pest populations, but the manual identification and enumeration of insects caught can be excessively time-consuming. To address these issues, researchers have engineered autonomous monitoring stations incorporating sticky traps placed in front of cameras. These cameras are programmed to capture images of the sticky traps at regular intervals and automatically upload them for analysis. Once uploaded, machine learning algorithms such as YOLO can be used to both identify and count the captured insects.
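
Once a detector has processed a trap image, turning its output into per-species counts is straightforward. The sketch below assumes the detector returns a list of dictionaries with `label` and `confidence` keys; this is a hypothetical format chosen for illustration, not the actual output of any YOLO implementation, and the 0.5 threshold is arbitrary.

```python
from collections import Counter


def count_insects(detections, min_confidence=0.5):
    """Count detections per species, ignoring low-confidence boxes."""
    return Counter(
        d["label"] for d in detections if d["confidence"] >= min_confidence
    )


# Made-up detections for a single trap image.
trap_detections = [
    {"label": "whitefly", "confidence": 0.9},
    {"label": "whitefly", "confidence": 0.4},
    {"label": "thrips", "confidence": 0.7},
]
trap_counts = count_insects(trap_detections)
```

Tracking these counts over successive images gives the population trend that farmers need to decide when a treatment is warranted.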

Naturally, optimizing the performance of the machine learning models is critical, and researchers have turned to large-scale datasets such as Pest24 to fine-tune them. Pest24 is a comprehensive dataset containing more than 25000 annotated images retrieved from automated traps. Despite all the efforts that have gone into refining machine learning models, pest detection has proven to be a challenging problem where the results of automated analysis are still far from ideal. Specifically, the YOLOv3 model achieved a mean average precision of 59%, a useful achievement, though there is still substantial room for improvement.

AI in banking: Fighting credit card fraud using tree classifiers

Credit card fraud is an issue that continuously plagues the world of finance. In 2016 alone, fraudulent transactions using credit cards amounted to a staggering €1.8 billion. There are several types of credit card fraud such as stolen card fraud, fraud due to insolvency, or fraud from false card applications. However, the most common type is likely the unauthorized use of credit card information to make purchases.

Using the card’s details like number, CVV, or expiry date, fraudsters can quickly make online purchases before the cardholder becomes aware that their card details have been stolen. Due to the sheer volume of daily card transactions, and how quickly the card information is used once stolen, transactions have to be automatically checked using real-time systems capable of identifying suspicious behavior. Because of this, companies have started researching how machine learning can recognize which transactions are fraudulent and which are not.

In the area of credit card fraud detection, tree-based machine learning algorithms like decision trees, random forests, or gradient boosted trees are popular. These algorithms break down the decision process into a series of feature-based questions, just like a tree-based flowchart. These features are often things like the transaction amount, location, or merchant category.
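
The flowchart analogy can be made concrete with a hand-written tree. In the sketch below, the questions, thresholds, and field names are all invented for illustration; a trained decision tree would learn its own questions and thresholds from labeled transactions.

```python
def looks_fraudulent(transaction):
    """A hand-written decision tree with made-up thresholds and categories."""
    # First question: is this an unusually large purchase?
    if transaction["amount"] > 1000:
        # Second question: was it made outside the cardholder's home country?
        if transaction["country"] != transaction["home_country"]:
            return True
        # Third question: is it in a category assumed here to be high-risk?
        return transaction["merchant_category"] == "electronics"
    return False
```

Random forests and gradient boosted trees combine many such trees, each asking a different sequence of questions, and aggregate their answers.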

One of the most popular credit card fraud detection datasets is the ULB Credit Card Fraud Detection dataset. This dataset contains a total of 284807 transactions, out of which just 492 are identified as fraudulent. This creates a highly imbalanced scenario where just 0.17% of the data belongs to the targeted class. Because of this, researchers often use metrics like precision and recall that are better suited for these scenarios.
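
The short sketch below works through the arithmetic behind that imbalance and shows why plain accuracy is misleading here. The dataset sizes come from the figures above; the helper function is a minimal illustration written for this post.

```python
def precision_recall(predicted, actual):
    """Precision and recall for binary labels (1 = fraud, 0 = legitimate)."""
    tp = sum(1 for p, a in zip(predicted, actual) if p and a)
    fp = sum(1 for p, a in zip(predicted, actual) if p and not a)
    fn = sum(1 for p, a in zip(predicted, actual) if not p and a)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


total, fraudulent = 284807, 492
fraud_share = fraudulent / total  # about 0.0017, i.e. roughly 0.17%

# A model that labels every transaction as legitimate is almost always "right"...
accuracy_of_always_legit = (total - fraudulent) / total  # about 0.998

# ...yet it catches no fraud at all, which recall makes visible.
_, recall_of_always_legit = precision_recall([0] * 4, [1, 0, 0, 0])
```

Recall measures the share of fraudulent transactions that were caught, while precision measures the share of flagged transactions that were truly fraudulent.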

According to the latest research on credit card fraud detection using machine learning, tree-based models like random forests are capable of achieving a recall rate of about 81% on the ULB dataset, meaning that the model correctly identified 81% of the fraudulent transactions.

AI in retail: Using recommender systems to boost e-commerce sales

In the realm of modern retail, e-commerce has emerged as one of the leading ways consumers purchase goods and services. Among its many benefits, three pivotal factors have driven its exponential growth. First, the convenience of digitized retail browsing has enabled the exploration of vast product databases from any internet-connected device. Second, the integration of robust and secure digital payment gateways has ensured transactional safety, increasing consumer confidence. Third, the global reach of e-commerce platforms, unrestricted by geographic boundaries, has significantly broadened the consumer base.

In order to maximize the profit potential of online businesses, both large and small e-commerce sites have been integrating recommendation engines to further drive sales. Such is the importance of recommendation engines that, in the case of the global e-commerce giant Amazon, their proprietary recommendation engine is responsible for generating around 35% of their total revenue.

One typical way these recommendation engines work is by using collaborative filtering algorithms. These algorithms are engineered to anticipate the user's preferences based on the preferences of a collective user group. In essence, these algorithms draw from the shared behaviors and preferences of similar users to suggest relevant products. To understand it more clearly, consider an everyday scenario of selecting a new product to buy. More often than not, we turn to our friends for their suggestions, especially those friends who share our taste, and we inherently trust their recommendations because they understand our preferences. This is precisely how collaborative filtering algorithms rank e-commerce products to be advertised to users.
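
The "friends with similar taste" idea can be sketched as user-based collaborative filtering. This is a minimal illustration under simplifying assumptions (explicit ratings, cosine similarity, a tiny made-up dataset); production recommenders, including Amazon's, are considerably more sophisticated.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Similarity between two users' rating dictionaries (item -> rating)."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = sqrt(sum(r * r for r in a.values()))
    norm_b = sqrt(sum(r * r for r in b.values()))
    return dot / (norm_a * norm_b)


def recommend(target, ratings, top_n=3):
    """Rank items the target user has not rated by similarity-weighted ratings."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == target:
            continue
        similarity = cosine_similarity(ratings[target], other_ratings)
        if similarity <= 0:
            continue  # users with nothing in common contribute no suggestions
        for item, rating in other_ratings.items():
            if item not in ratings[target]:
                scores[item] = scores.get(item, 0.0) + similarity * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


# Made-up ratings on a 1-5 scale.
ratings = {
    "ana": {"tent": 5, "stove": 4},
    "ben": {"tent": 5, "stove": 5, "lantern": 5},
    "cho": {"novel": 5, "lamp": 4},
}
suggestions = recommend("ana", ratings)
```

Here "ana" and "ben" liked the same camping gear, so the item "ben" rated that "ana" has not seen yet is suggested, while "cho", who shares no items with "ana", has no influence.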

A popular benchmark in the field of recommendation systems is the Amazon-book dataset. This dataset is made up of thousands of book reviews contributed by users, with the ultimate objective of suggesting new books that align with a user's interests, based on their existing reviews. In terms of performance, the current state of the art shows a top-20 recall rate of approximately 7%. This means that, on average, about 7% of the books that are actually relevant to a user, as indicated by their held-out book review history, appear among the top 20 books suggested by the recommender system.
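
Because top-k recall is easy to confuse with top-k precision, the sketch below computes both side by side. The function names and the toy data are illustrative only.

```python
def recall_at_k(recommended, relevant, k=20):
    """Fraction of the relevant items that appear in the top-k recommendations."""
    if not relevant:
        return 0.0
    hits = len(set(recommended[:k]) & set(relevant))
    return hits / len(relevant)


def precision_at_k(recommended, relevant, k=20):
    """Fraction of the top-k recommendations that are relevant."""
    if k <= 0:
        return 0.0
    hits = len(set(recommended[:k]) & set(relevant))
    return hits / k


recommended_books = ["a", "b", "c", "d"]
relevant_books = ["b", "z"]
```

With these toy lists and k=4, one of the two relevant books is retrieved (recall 0.5), while one of the four suggestions is relevant (precision 0.25).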

AI in recruiting: Using transformers for personalized outreach

The recruitment landscape is yet another area heavily revolutionized by technological advances. Traditional recruiting methods such as writing a one-size-fits-all message and sending it to hundreds of candidates are now becoming obsolete with AI text generator models such as transformers.

These transformers are trained on vast amounts of text sequences and learn a language model that can both understand and generate text. Since much of the information used to train them has been scraped from the internet, transformer networks are particularly good at parsing the information displayed on websites such as LinkedIn. This capability of processing text in its raw form is particularly beneficial in the recruitment landscape, where potential candidates often have varied and unique ways of presenting their skills, experiences, and aspirations.

Once a potential candidate's profile is analyzed, transformers can generate personalized messages to engage with them. You can ask models like ChatGPT to “Generate a personalized message to recruit this candidate. Here is the public information on LinkedIn: [Paste the information here]”. ChatGPT will generate a personalized message based on the candidate's background, interests, and the specific job role for which they might be a good fit. For example, a message to a candidate with a background in data science might highlight the innovative projects your organization is undertaking in the field, or how their unique skill set aligns with your company's needs. These personalized messages can significantly enhance engagement rates as candidates feel acknowledged and valued when they receive a message tailored specifically for them.

When recruiters want to further automate the recruiting process, they can turn to fine-tuned transformer networks to automatically generate dialogue messages. Here, transformer models like BERT are capable of formulating responses that are influenced by the candidate's replies. A popular benchmark for evaluating these dialog transformers is the Persona-Chat dataset, which includes over 10000 dialogues. In this benchmark, state-of-the-art models achieve an F1 score of over 21%, meaning that, measured by word-level precision and recall, roughly a fifth of the words overlap between the generated messages and the reference responses in the dataset.
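
A word-overlap F1 score of this kind can be sketched in a few lines. The version below assumes lowercasing and whitespace tokenization, which is a simplification; the benchmark's official evaluation scripts may normalize text differently.

```python
from collections import Counter


def word_f1(generated, reference):
    """Harmonic mean of word-level precision and recall between two messages."""
    gen_tokens = generated.lower().split()
    ref_tokens = reference.lower().split()
    # Multiset intersection: each word counts as many times as it appears in both.
    overlap = sum((Counter(gen_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(gen_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


example_f1 = word_f1("i love hiking", "i enjoy hiking a lot")
```

In the example, two of the three generated words appear in the five-word reference, so precision is 2/3, recall is 2/5, and the F1 score is 0.5.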

AI in fashion: Revolutionizing online shopping with virtual try-on

In a decade marked by an unprecedented explosion of AI applications, the fashion industry is not exempt from this disruptive technology. Nowadays, online shopping is far from a novelty: the popularization of the internet has opened a wide range of possibilities, and retail stores have embraced this technology to reach a global audience. However, whenever you are browsing through an online store, you may not be sure if a piece of clothing will suit you. Now imagine that you can turn on your camera, upload a picture, and see how it would look on you without ever leaving the comfort of your home. This virtual try-on system may just be the perfect ally in a highly competitive online fashion market.

Virtual try-on systems use artificial intelligence to create an image of a customer wearing a specific clothing product, using just a picture of the customer and a picture of the product. The machine learning model will try to generate a new image where the pose of the customer is preserved, but the customer is now wearing the selected product. Additionally, these systems will try to preserve things like lighting conditions, background, self-occlusions, or other details present in the original picture.

One of the most popular benchmarks for this kind of model is the VITON dataset. This dataset has over 10000 samples, where each sample has a frontal image of a person, a target garment, and the same person wearing that garment. Models of this kind can be tricky to evaluate and, besides a benchmark, researchers have to carefully choose a metric to measure their performance. In this case, a popular metric is the FID score, which uses the internal activations of a pretrained Inception network to compare how similar the generated images are to real ones: the closer the activation statistics, the more similar the images. Here, the top-scoring model achieves an FID lower than 10 and, since this metric can be hard to grasp, Figure 3 shows images generated using one of the best performing models.
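
To give some intuition for the number, FID fits a Gaussian to the Inception activations of the real images and another to those of the generated images, then measures the Fréchet distance between the two: the squared distance between the means plus a term comparing the covariances. The sketch below is a deliberately simplified one-dimensional version, where each image is assumed to be summarized by a single activation value; the real metric works on high-dimensional activation vectors with full covariance matrices.

```python
from statistics import mean, pstdev


def frechet_distance_1d(real_activations, generated_activations):
    """Fréchet distance between two one-dimensional Gaussians fitted to the data.

    In one dimension the distance reduces to the squared difference of the
    means plus the squared difference of the standard deviations.
    """
    mu_r, mu_g = mean(real_activations), mean(generated_activations)
    sigma_r, sigma_g = pstdev(real_activations), pstdev(generated_activations)
    return (mu_r - mu_g) ** 2 + (sigma_r - sigma_g) ** 2


# Made-up activation values for two small sets of images.
toy_distance = frechet_distance_1d([0.0, 2.0], [1.0, 3.0])
```

Identical activation statistics give a distance of zero, which is why lower FID values indicate generated images that are statistically closer to real ones.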

AI in advertising: Optimizing marketing campaigns through click-through rate forecasting

Total digital ad spending is expected to reach $455.3 billion in 2023. Of that, 55.2% will go to display advertising and 40.2% will go to search. With digital formats commanding the lion’s share of ad dollars, marketers need to continuously optimize the effectiveness of their campaigns.

In order to measure the effectiveness of their campaigns, marketers often resort to metrics like the click-through rate. This metric is calculated by dividing the number of clicks by the number of impressions and expressing the result as a percentage. According to recent studies, the average click-through rate in Google AdWords across all industries is 3.17%.
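
The calculation itself is a one-liner. The figures in the example are made up so that they reproduce the average rate quoted above.

```python
def click_through_rate(clicks, impressions):
    """Click-through rate as a percentage of impressions."""
    if impressions == 0:
        return 0.0
    return 100 * clicks / impressions


# Hypothetical campaign: 317 clicks out of 10000 impressions.
campaign_ctr = click_through_rate(317, 10000)
```

An ad shown 10000 times and clicked 317 times therefore has a click-through rate of 3.17%.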

To optimize the return on investment, marketers will often carefully design their marketing campaigns to maximize the click-through rate, and this is where AI comes into play. Rather than solely relying on their expertise, which sometimes can be limited, they can turn to machine learning models to predict how well a marketing campaign will work.

The machine learning models tuned to forecast the click-through rate will often be trained and evaluated on benchmarks like the Criteo dataset, a benchmark that includes over 45 million samples. Each sample in this dataset contains 13 numerical features and 26 categorical features, along with a binary label indicating whether the corresponding ad was clicked.

One of the best performing machine learning models for the Criteo benchmark is a two-stream multilayer perceptron model called FinalMLP. When evaluated using the area under the receiver operating characteristic curve, commonly called the ROC curve, this model achieves an 81% score. This metric sweeps a sequence of thresholds over the model's predictions, separating “positive” ads (those scored above the threshold) from “negative” ads (those below it), and summarizes how well the predictions agree with the dataset annotations across all of those thresholds.
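
An equivalent way to read the area under the ROC curve is as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below computes it that way by comparing every positive-negative pair; it is a simple quadratic-time illustration on made-up scores, not the evaluation code used for the benchmark.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve, computed by pairwise comparison.

    scores: model predictions (higher means more likely to be positive)
    labels: ground-truth labels, 1 for positive and 0 for negative
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


toy_auc = roc_auc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])
```

In the toy example, three of the four positive-negative pairs are ranked correctly, giving 0.75. A score of 0.5 corresponds to random ranking and 1.0 to a perfect one.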

Conclusion

The integration of Artificial Intelligence across industries represents a big step forward in the optimization of efficiency, precision, and comfort. From the automation of labor-intensive processes in engineering to the enhancement of diagnostic capabilities in healthcare, AI is reshaping our world and redefining our future.